Recurrent neural networks

Recurrent neural networks are a type of artificial neural network used to process sequential data. They differ from other types of neural networks in that they have feedback connections that carry information about previous states.

Recurrent neural networks are suitable for working with time series, text, and other data in which the order of elements matters. For example, they are used for speech recognition, machine translation, and text sentiment analysis.

The main advantage of recurrent neural networks is that they can process inputs of varying length. The same network can work with texts of different lengths or with time series that have different numbers of points.
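As a rough illustration of this property, the sketch below runs one tiny recurrent function over two sequences of different lengths. The function name `run_rnn` and the weight values are assumptions chosen for the example, not part of any library.

```python
import math

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Run a scalar recurrent unit over a sequence and return the final state."""
    h = 0.0  # initial hidden state
    for x in sequence:
        # The same weights are reused at every time step,
        # so the sequence can have any length.
        h = math.tanh(w_x * x + w_h * h + b)
    return h

short = [1.0, -0.5, 0.25]
long = [1.0, -0.5, 0.25, 0.75, -1.0, 0.5, 0.1]

print(len(short), run_rnn(short))
print(len(long), run_rnn(long))
```

Because the loop applies the same weights at each step, nothing in the function depends on the sequence length.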

However, recurrent neural networks also have drawbacks. They usually take more time and computational resources to train than feedforward networks, because the elements of a sequence must be processed one after another. In addition, they suffer from the vanishing gradient problem, where gradients become very small and make training difficult.

Despite these difficulties, recurrent neural networks remain a useful tool for working with sequential data and are used in many areas.

The content of the article:

- The principle of operation of recurrent neural networks

- What is LSTM?

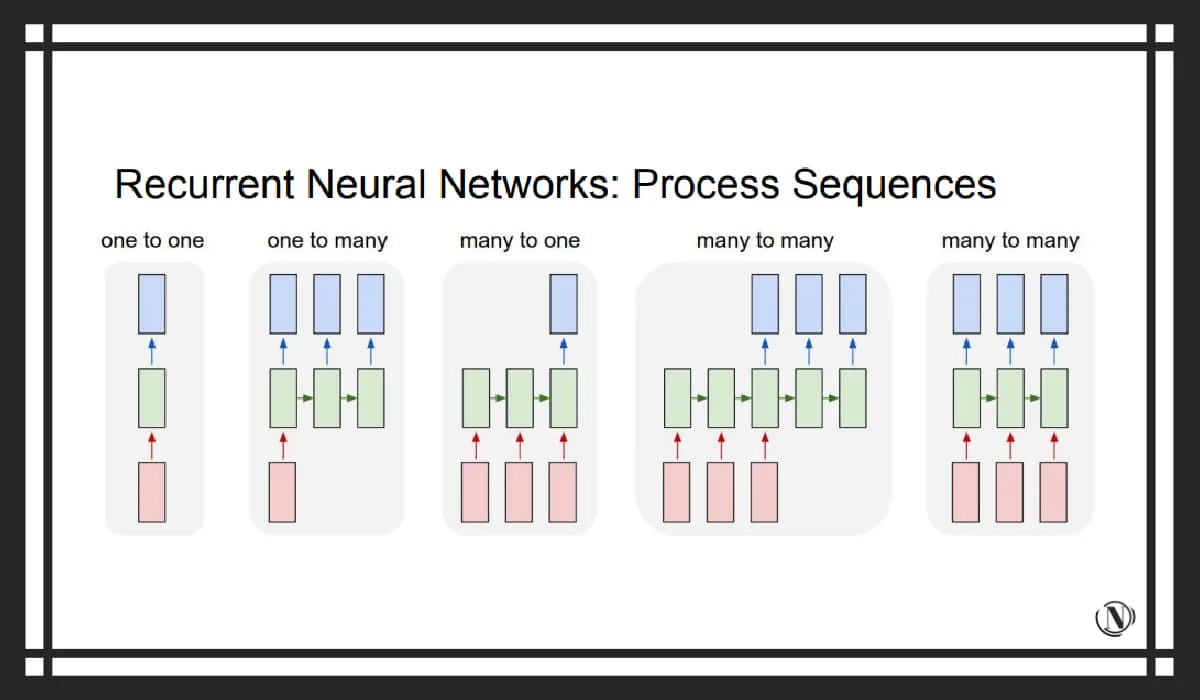

- Types of Recurrent Neural Networks

- Architecture of recurrent neural networks

- Training of recurrent neural networks

- Examples of using recurrent neural networks in business

- What companies use recurrent neural networks?

- Advantages and limitations of recurrent neural networks

- The Future of Recurrent Neural Networks

- Conclusion

- FAQ

The principle of operation of recurrent neural networks

Recurrent neural networks (RNNs) are a type of artificial neural network used to process sequential data. RNNs differ from other types of neural networks in that they have feedback connections that retain information about previous states.

Unlike feedforward networks, which process each input element independently, recurrent neural networks use information about previous states to process the current input element. This is how an RNN takes context into account when processing a sequence.

A common way to implement recurrent neural networks is to use memory cells.

A memory cell is an element of a neural network that stores information about previous states. It takes the current input element and its own previous state as input and calculates a new state from them.
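The state update can be sketched in a few lines. The example below assumes the simplest form of cell, h_t = tanh(W_x x_t + W_h h_(t-1) + b); the function name `rnn_step` and all weight values are illustrative assumptions.

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One step of a simple recurrent cell: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    h_new = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))            # current input
        total += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))  # previous state
        h_new.append(math.tanh(total))
    return h_new

# Illustrative weights: 2 input features, 3 hidden units.
W_x = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]]
W_h = [[0.3, 0.0, -0.1], [0.2, 0.1, 0.0], [0.0, -0.3, 0.2]]
b = [0.0, 0.1, -0.1]

h = [0.0, 0.0, 0.0]  # initial state
for x in [[1.0, 0.5], [0.0, -1.0], [0.5, 0.5]]:
    h = rnn_step(x, h, W_x, W_h, b)  # the new state depends on the old one
print(h)
```

The state `h` returned at each step is fed back in at the next step, which is the feedback described above.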

A popular type of memory cell is the LSTM (Long Short-Term Memory). An LSTM has three gates: an input gate, a forget gate, and an output gate. These gates control the flow of information within the memory cell, which lets the LSTM retain information about long-term dependencies between sequence elements.

What is LSTM?

LSTM (Long Short-Term Memory) is a type of recurrent neural network designed specifically to handle long-term dependencies between sequence elements. The LSTM cell has three gates: an input gate, a forget gate, and an output gate. These gates control what is written to the memory cell, what is kept in it, and what is passed on, which lets the LSTM retain information over many time steps.

LSTM was designed to address the vanishing gradient problem that occurs when training recurrent neural networks. It is used in many areas, such as speech recognition, machine translation, and text sentiment analysis.
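To make the role of the three gates concrete, here is a minimal sketch of one LSTM step for a single unit with a scalar input. It follows the commonly used formulation; the function name `lstm_step`, the parameter layout, and the weight values are assumptions made for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell with scalar input.

    p maps each name to a hypothetical (w_x, w_h, bias) triple.
    """
    def pre(name):
        w_x, w_h, bias = p[name]
        return w_x * x + w_h * h_prev + bias

    i = sigmoid(pre("input"))        # input gate: how much new information to write
    f = sigmoid(pre("forget"))       # forget gate: how much of the old cell state to keep
    o = sigmoid(pre("output"))       # output gate: how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate value to write

    c = f * c_prev + i * g           # additive update of the cell state
    h = o * math.tanh(c)             # hidden state passed to the next step
    return h, c

params = {
    "input": (0.5, 0.1, 0.0),
    "forget": (0.3, 0.2, 1.0),
    "output": (0.4, -0.1, 0.0),
    "candidate": (0.9, 0.3, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    print(round(h, 4), round(c, 4))
```

The cell state `c` is updated by addition rather than by repeated multiplication through an activation function, which is the main reason gradients survive over more time steps than in a simple recurrent cell.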

Types of Recurrent Neural Networks

There are many types of recurrent neural networks, including:

- Long short-term memory (LSTM)

- Gated recurrent unit (GRU)

- Fully recurrent network

- Recursive neural network

- Hopfield network

- Bidirectional associative memory (BAM)

- Elman and Jordan networks

- Echo state networks

- Neural history compressor

Each of these types has its own characteristics and is used in various tasks.

Architecture of recurrent neural networks

The architecture of recurrent neural networks (RNNs) differs from that of other neural networks in that it contains feedback connections. These recurrent connections carry information about previous states, and the network uses it to process the current input element.

The main element of the RNN is the memory cell. The cell receives the current element and the previous state of the memory cell as input and calculates a new state based on these data. Memory cells come in different types, depending on the RNN architecture used.

- A common type of memory cell is the LSTM (Long Short-Term Memory). It has three gates: an input gate, a forget gate, and an output gate. These gates control the flow of information within the memory cell, which lets the LSTM retain information about long-term dependencies between sequence elements.

- Another popular memory cell type is the GRU (Gated Recurrent Unit). It has two gates: an update gate and a reset gate. These gates control the flow of information within the memory cell, so the GRU can also retain information about long-term dependencies between sequence elements.
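For comparison with the LSTM, the following sketch shows one GRU step for a single unit with a scalar input. The function name `gru_step`, the parameter layout, and the weights are assumptions for illustration. Note also that sources differ on whether the update gate weights the old state or the candidate; this sketch uses the form in which it weights the candidate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One step of a single-unit GRU with scalar input.

    p maps each name to a hypothetical (w_x, w_h, bias) triple.
    """
    uw_x, uw_h, ub = p["update"]
    rw_x, rw_h, rb = p["reset"]
    cw_x, cw_h, cb = p["candidate"]

    z = sigmoid(uw_x * x + uw_h * h_prev + ub)  # update gate
    r = sigmoid(rw_x * x + rw_h * h_prev + rb)  # reset gate
    # The reset gate decides how much of the old state feeds the candidate.
    h_cand = math.tanh(cw_x * x + cw_h * (r * h_prev) + cb)
    # The update gate blends the old state with the candidate.
    return (1.0 - z) * h_prev + z * h_cand

params = {
    "update": (0.6, 0.2, 0.0),
    "reset": (0.4, 0.1, 0.0),
    "candidate": (0.8, 0.5, 0.0),
}

h = 0.0
for x in [1.0, 0.0, -0.5, 0.0]:
    h = gru_step(x, h, params)
    print(round(h, 4))
```

Unlike the LSTM, the GRU keeps a single state vector and has no separate cell state, so it has fewer parameters for the same number of units.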

Training of recurrent neural networks

Recurrent neural networks (RNNs) are trained using backpropagation through time (BPTT). This algorithm is similar to the standard backpropagation algorithm used to train other neural networks, with one major difference: the network is unrolled across the time steps of the sequence, so the gradient for each shared parameter is accumulated over all steps.

During training, an RNN is presented with a sequence of inputs and a corresponding sequence of desired outputs. The network processes the input and calculates its output. The error between the desired and actual network output is then calculated.

This error is then propagated back in time to compute the gradients for each network parameter. These gradients are used to update the network parameters using an optimization algorithm such as stochastic gradient descent.
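The procedure can be sketched for the smallest possible case: a scalar recurrent unit h_t = tanh(w_x x_t + w_h h_(t-1)) with a squared-error loss summed over time steps. The function names, data, and learning rate below are assumptions chosen for the example.

```python
import math

def forward(xs, w_x, w_h):
    """Return hidden states [h_0, h_1, ..., h_T], with h_0 = 0."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1]))
    return hs

def loss(xs, ys, w_x, w_h):
    """Half the sum of squared errors between outputs and targets."""
    hs = forward(xs, w_x, w_h)
    return 0.5 * sum((h - y) ** 2 for h, y in zip(hs[1:], ys))

def bptt(xs, ys, w_x, w_h):
    """Gradients of the loss with respect to w_x and w_h."""
    hs = forward(xs, w_x, w_h)
    grad_w_x, grad_w_h = 0.0, 0.0
    dh_next = 0.0  # error arriving from later time steps
    for t in range(len(xs), 0, -1):          # walk backwards in time
        dh = (hs[t] - ys[t - 1]) + dh_next   # local error plus future error
        dz = dh * (1.0 - hs[t] ** 2)         # back through tanh
        grad_w_x += dz * xs[t - 1]           # shared weights accumulate over steps
        grad_w_h += dz * hs[t - 1]
        dh_next = dz * w_h                   # pass the error to the previous step
    return grad_w_x, grad_w_h

xs = [0.5, -0.3, 0.8, 0.1]   # input sequence
ys = [0.2, 0.0, 0.4, 0.3]    # desired outputs
w_x, w_h = 0.5, 0.5
learning_rate = 0.1

for step in range(5):
    g_x, g_h = bptt(xs, ys, w_x, w_h)
    w_x -= learning_rate * g_x   # plain gradient descent update
    w_h -= learning_rate * g_h
    print(step, loss(xs, ys, w_x, w_h))
```

A useful sanity check for any hand-written BPTT is to compare its gradients with finite-difference estimates of the loss.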

However, RNN training is hampered by the problem of vanishing or exploding gradients. As the error is propagated back through many time steps, the gradients can become extremely small or extremely large, which makes training difficult. Common remedies include gradient clipping (limiting the gradient norm) and gated memory cells such as LSTM or GRU.
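Limiting the gradient norm is simple to implement. The sketch below rescales a list of gradient values when their Euclidean norm exceeds a threshold; the function name `clip_by_norm` and the threshold are assumptions for this example.

```python
import math

def clip_by_norm(grads, max_norm):
    """Rescale grads so that their Euclidean norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)       # already small enough, leave unchanged
    scale = max_norm / norm
    return [g * scale for g in grads]

grads = [3.0, 4.0]               # norm is 5.0
clipped = clip_by_norm(grads, max_norm=1.0)
print(clipped)                   # same direction, norm scaled down to about 1.0
```

Clipping keeps the direction of the gradient and only shortens it, so it helps with exploding gradients but does nothing for vanishing ones.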

Examples of using recurrent neural networks in business

Recurrent neural networks (RNNs) are used in business to solve problems that involve sequential data. Some examples of RNN applications include:

- Time series forecasting: RNNs are used to predict time series such as sales, stock prices, and weather. This can help companies make informed decisions and plan activities.

- Text sentiment analysis: RNNs are used to analyze the sentiment of a text, for example to determine whether a review is positive or negative. This can help companies improve their product or service and increase customer satisfaction.

- Machine translation: RNNs are used to translate text from one language to another. This can help companies expand into new markets and communicate with customers in different languages.

- Speech recognition: RNNs are used for speech recognition and voice-to-text conversion. This can help companies improve voice interfaces and enhance customer experience.

These examples show how RNNs are applied to practical problems. They are widely used tools for improving products and services and increasing operational efficiency.

What companies use recurrent neural networks?

Recurrent neural networks are used by companies to solve a variety of problems. For example:

- Google uses recurrent neural networks for speech recognition in products such as Google Assistant and Google Translate, and for machine translation of text from one language to another.

- Amazon uses recurrent neural networks to analyze the sentiment of product reviews on its website. This helps them improve the quality of their products and services.

- Netflix uses recurrent neural networks to predict user interests and recommend movies and TV shows.

These are three examples of how large technology companies use recurrent neural networks in their work.

Advantages and limitations of recurrent neural networks

Recurrent neural networks (RNNs) have a number of advantages over other types of neural networks. The main advantage of RNNs is the ability to process sequential data. Thanks to their feedback connections, RNNs store information about previous states and use it to process the current input element. This lets them take context into account when processing a sequence.

However, RNNs also have a number of limitations. One is the problem of vanishing or exploding gradients: gradients can become extremely small or extremely large, which makes training difficult. Common remedies include gradient clipping (limiting the gradient norm) and gated memory cells such as LSTM or GRU.
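A simplified calculation shows why gradients shrink or grow so quickly. The sketch below assumes a linear scalar recurrence h_t = w_h * h_(t-1) and ignores the activation function, so the gradient of the final state with respect to the initial state is just w_h multiplied by itself once per step. The function name is hypothetical.

```python
def linear_state_gradient(w_h, steps):
    """Gradient of h_T with respect to h_0 for h_t = w_h * h_(t-1)."""
    grad = 1.0
    for _ in range(steps):
        grad *= w_h  # one factor per time step, by the chain rule
    return grad

vanishing = linear_state_gradient(0.5, 50)
exploding = linear_state_gradient(1.5, 50)
print(vanishing)  # shrinks towards zero
print(exploding)  # grows very large
```

In a real network the factor at each step also depends on the activation function and on weight matrices rather than a single number, but the repeated multiplication is the same.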

In addition, RNN training is computationally expensive due to the need to propagate the error back in time. This makes it difficult to use RNNs to process long data sequences.

The Future of Recurrent Neural Networks

Recurrent neural networks (RNNs) remain an active area of research in artificial intelligence. In the future, RNNs are expected to be applied to increasingly complex problems that involve sequential data.

The main direction of development of RNNs is to improve their ability to handle long-term dependencies between sequence elements. This can be achieved through the use of advanced memory cell types such as LSTM or GRU, or through new architectural solutions.

In addition, new RNN training methods are expected to be developed that will allow them to process large amounts of data more quickly. This may include the use of parallel computing and distributed training.

The future of RNN looks promising. They will continue to play a significant role in the field of artificial intelligence and will be used to solve extremely complex problems.

Conclusion

In this article, we have discussed various aspects of recurrent neural networks (RNNs). We considered their principle of operation, architecture, applications, advantages and limitations, and future development.

RNNs are an essential tool for processing sequential data. They have feedback connections that allow them to store information about previous states and use it to process the current input element. This allows them to take context into account when processing sequences.

However, RNNs also have a number of limitations, such as the vanishing or exploding gradient problem and the high computational cost of training. In the future, it is expected that new methods and architectures will be developed that will allow RNNs to solve more complex problems more quickly.

FAQ

Q: What are recurrent neural networks?

A: Recurrent neural networks (RNNs) are a type of artificial neural network used to process sequential data. RNNs differ from other types of neural networks in that they have feedback connections that allow them to retain information about previous states.

Q: What are the advantages of recurrent neural networks?

A: The main advantage of recurrent neural networks is the ability to process sequential data. Thanks to their feedback connections, RNNs can store information about previous states and use it to process the current input element. This allows them to take context into account when processing sequences.

Q: What are the limitations of recurrent neural networks?

A: One of the limitations of recurrent neural networks is the problem of vanishing or exploding gradients. Gradients can become extremely small or extremely large, which makes training difficult. Also, RNN training can be computationally expensive because the error has to be propagated back through time.

Q: What are some examples of using recurrent neural networks in business?

A: Recurrent neural networks are used in business for tasks such as time series forecasting, text sentiment analysis, machine translation, and speech recognition. They can help companies improve products and services and work more efficiently.

Thanks for reading: ✔️ SEO HELPER | NICOLA.TOP