How to make robots.txt for WordPress

Hello everyone, today I will explain how to make a robots.txt file for WordPress. The main purpose of a robots.txt file is to tell search engine robots which sections of your site they may crawl and index and which they may not.

In other words, this service file tells a search bot which sections of the site should appear in search engines and which it should skip. Keep in mind that the file is advisory: a search robot can ignore a prohibiting directive and index the section anyway, although such cases are quite rare.

Contents of the article:

- Creating a robots.txt file

- Decoding the robots.txt file (directives)

- An example of an extended Robots.txt file for my site

Robots.txt for WordPress - how to do it?

1. Create a text file named robots.txt. Any regular text editor will do.

2. Next, enter the following information in this file:

    User-agent: Yandex
    Disallow: /wp-admin
    Disallow: /wp-includes
    Disallow: /wp-comments
    Disallow: /wp-content/plugins
    Disallow: /wp-content/themes
    Disallow: /wp-content/cache
    Disallow: /wp-login.php
    Disallow: /wp-register.php
    Disallow: */trackback
    Disallow: */feed
    Disallow: /cgi-bin
    Disallow: /tmp/
    Disallow: *?s=

    User-agent: *
    Disallow: /wp-admin
    Disallow: /wp-includes
    Disallow: /wp-comments
    Disallow: /wp-content/plugins
    Disallow: /wp-content/themes
    Disallow: /wp-content/cache
    Disallow: /wp-login.php
    Disallow: /wp-register.php
    Disallow: */trackback
    Disallow: */feed
    Disallow: /cgi-bin
    Disallow: /tmp/
    Disallow: *?s=

    Host: site.com
    Sitemap: http://site.com/sitemap.xml

3. Replace site.com in the Host directive with the domain name of your site.

4. In the Sitemap directive, specify the full URL of your sitemap. The URL may differ depending on the plugin that generates the sitemap on your site.

5. Save and upload the robots.txt file to the root folder of your site. You can do this with any FTP client.

6. Great, your file is ready and functioning. Now, before crawling the site, search engine robots will first access this service file.
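Steps 1 to 4 can also be scripted. The following is only a minimal Python sketch under stated assumptions: the function name `build_robots_txt` and its parameters are hypothetical, and the rule list is a shortened version of the one above.

```python
def build_robots_txt(sitemap_url, disallowed_paths, user_agent="*"):
    """Return robots.txt content with one directive per line.

    Hypothetical helper for illustration; it is not part of WordPress.
    """
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    # A blank line separates the rule group from the Sitemap directive.
    lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


content = build_robots_txt(
    "http://site.com/sitemap.xml",
    ["/wp-admin", "/wp-includes", "/wp-login.php"],
)
print(content)
```

The printed text can be saved as robots.txt and uploaded to the site root as described in step 5.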

Decoding the robots.txt file (directives)

Now let's look at the robots.txt file in more detail: what we added to it and why.

User-agent - a directive that specifies the name of the search robot the rules apply to. With it, you can prohibit or allow specific search robots to visit parts of your site. For example:

We forbid the Yandex robot to view the cache folder:

    User-agent: Yandex
    Disallow: /wp-content/cache

We allow the Bing robot to browse the themes folder (with site themes):

    User-agent: bingbot
    Allow: /wp-content/themes

To make the rules apply equally to all search engines, use the directive: User-agent: *

Allow and Disallow - the allowing and forbidding directives. Examples:
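One way to check how per-robot groups behave is Python's standard `urllib.robotparser` module. The sketch below is an illustration, not a description of how any particular search engine works: the rules and the site.com URLs are made up for the example, and this parser only does simple prefix matching, so it does not interpret `*` wildcards inside paths.

```python
from urllib.robotparser import RobotFileParser

# Example rules: one group for Yandex, one for everyone else.
rules = """\
User-agent: Yandex
Disallow: /wp-content/cache

User-agent: *
Disallow: /wp-admin
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

cache_url = "http://site.com/wp-content/cache/page.html"

yandex_cache = parser.can_fetch("Yandex", cache_url)
other_cache = parser.can_fetch("bingbot", cache_url)
other_admin = parser.can_fetch("bingbot", "http://site.com/wp-admin/")

print(yandex_cache)  # False: the Yandex group forbids the cache folder
print(other_cache)   # True: the "*" group says nothing about the cache folder
print(other_admin)   # False: the "*" group forbids /wp-admin
```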

Allow the Yandex bot to view the wp-admin folder:

    User-agent: Yandex
    Allow: /wp-admin

Prevent all bots from viewing the wp-content folder:

    User-agent: *
    Disallow: /wp-content

The basic robots.txt from step 2 does not use the Allow directive, because anything that is not prohibited with Disallow is allowed by default.
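The default-allow behavior, and the use of Allow as an exception inside a forbidden folder, can be tried out with the same standard-library parser. This is a sketch with made-up rules and URLs. Note one assumption that matters here: Python's parser applies the first matching rule in file order, so the Allow line is placed before the Disallow line, whereas Google documents a longest-match rule instead.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())


def allowed(path):
    """Hypothetical helper: may any robot fetch this path on site.com?"""
    return parser.can_fetch("*", "http://site.com" + path)


print(allowed("/wp-admin/options.php"))     # False: covered by Disallow
print(allowed("/wp-admin/admin-ajax.php"))  # True: explicit Allow exception
print(allowed("/blog/hello-world/"))        # True: not mentioned, allowed by default
```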

- Host - a directive that specifies the main mirror of the site, which is the one the robot will index. Other mirrors will not be taken into account. Note that only Yandex ever recognized Host, and it stopped supporting the directive in 2018 in favor of 301 redirects.

- Sitemap - here we specify the URL of the sitemap. Please note that a sitemap is a very important tool for website promotion, so do not forget to include it.
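To confirm that the Sitemap line is written in a form a parser can read, the standard-library parser can list the sitemap URLs it found. This is a small sketch that assumes Python 3.8 or newer (where `site_maps()` is available); the rules are an example, and this particular parser simply skips the Host line.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Disallow: /wp-admin

Host: site.com
Sitemap: http://site.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# site_maps() returns a list of sitemap URLs, or None if there are none.
sitemaps = parser.site_maps()
print(sitemaps)  # ['http://site.com/sitemap.xml']
```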

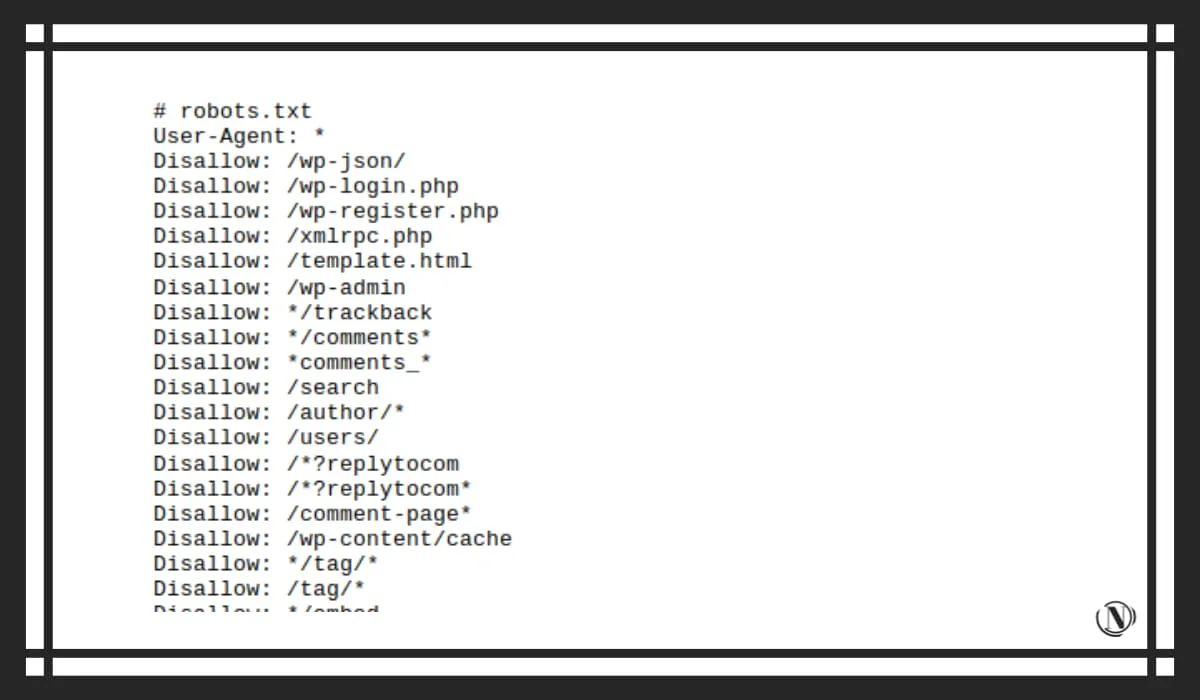

An example of an extended Robots.txt file for my site

Now let's take a look at my site's robots file. Please note that I edit the prohibiting or allowing directives according to my needs. If you decide to use my example, be sure to review the file and remove those directives that you do not need.

Let's take the robots.txt file of this site:

    # robots.txt
    User-Agent: *
    Disallow: /wp-json/ # technical information
    Disallow: /wp-login.php # security
    Disallow: /wp-register.php # security
    Disallow: /xmlrpc.php # security, WordPress API file
    Disallow: /template.html # technical information
    Disallow: /wp-admin # security
    Disallow: */trackback # duplicates, comments
    Disallow: */comments* # duplicates, comments
    Disallow: *comments_* # duplicates, comments
    Disallow: /search # website search result pages
    Disallow: /author/* # author and user pages
    Disallow: /users/
    Disallow: /*?replytocom # supplemental index
    Disallow: /*?replytocom*
    Disallow: /comment-page* # comment pages
    Disallow: /wp-content/cache # cache folder
    Disallow: */tag/* # tags - if appropriate
    Disallow: /tag/*
    Disallow: */embed$ # all embeds
    Disallow: */?s=* # search
    Disallow: */?p=* # search
    Disallow: */?x=* # search
    Disallow: */?xs_review=* # post editor pages, visual preview
    Disallow: /?page_id=* # page editor pages, visual preview
    Disallow: */feed # all feeds and RSS feeds
    Disallow: */?feed
    Disallow: */rss
    Disallow: *.php # technical files
    Disallow: /ads.txt # technical ad pages, if appropriate
    Disallow: */amp # all AMP pages - if you use AMP, do not disallow them
    Disallow: */amp?
    Disallow: */amp/
    Disallow: */?amp*
    Disallow: */stylesheet # some stylesheets that popped up
    Disallow: */stylesheet*
    Disallow: /?customize_changeset_uuid= # technical duplicates from the caching and compression plugin
    Disallow: */?customize_changeset_uuid*customize_autosaved=on
    # Tell the bots which files are needed for the correct display of the site pages.
    Allow: /wp-content/uploads/
    Allow: /wp-includes
    Allow: /wp-content
    Allow: */uploads
    Allow: /*/*.js
    Allow: /*/*.css
    Allow: /wp-*.png
    Allow: /wp-*.jpg
    Allow: /wp-*.jpeg
    Allow: /wp-*.gif
    Allow: /wp-admin/admin-ajax.php

    # Permission for bots to view folders with images
    User-agent: Googlebot-Image
    Allow: /wp-content/uploads/

    User-agent: Yandex-Images
    Allow: /wp-content/uploads/

    User-agent: Mail.Ru-Images
    Allow: /wp-content/uploads/

    User-agent: ia_archiver-Images
    Allow: /wp-content/uploads/

    User-agent: Bingbot-Images
    Allow: /wp-content/uploads/

    # Specify the main mirror and sitemap
    Host: https://nicola.top
    Sitemap: https://nicola.top/sitemap_index.xml
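The extended file relies on the `*` and `$` wildcards and mixes Allow with Disallow, so it helps to see how such patterns are usually evaluated. The sketch below follows the matching rules described in RFC 9309 and in Google's documentation (the longest matching pattern wins, Allow wins a tie), but it is only an approximation: the helper names `pattern_to_regex` and `is_allowed` are hypothetical, percent-encoding is not handled, and individual crawlers may behave differently.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path, rules):
    """rules: list of (directive, pattern) pairs, e.g. ("Disallow", "*/feed").

    The longest matching pattern wins; on a tie, Allow wins.
    A path that matches no rule is allowed.
    """
    best_len, verdict = -1, True
    for directive, pattern in rules:
        # An empty pattern carries no restriction, so it is skipped.
        if not pattern or not pattern_to_regex(pattern).match(path):
            continue
        allow = directive.lower() == "allow"
        if len(pattern) > best_len or (len(pattern) == best_len and allow):
            best_len, verdict = len(pattern), allow
    return verdict


# A few rules taken from the file above.
rules = [
    ("Disallow", "/wp-admin"),
    ("Allow", "/wp-admin/admin-ajax.php"),
    ("Disallow", "*/embed$"),
    ("Disallow", "*/feed"),
]

print(is_allowed("/wp-admin/admin-ajax.php", rules))  # True
print(is_allowed("/wp-admin/options.php", rules))     # False
print(is_allowed("/my-post/embed", rules))            # False
print(is_allowed("/my-post/embed/extra", rules))      # True
print(is_allowed("/category/news/feed", rules))       # False
```

Under this model the order of the lines does not matter, which is why the Allow block can sit after the Disallow block in the file above.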

Conclusion

I have covered in sufficient detail how to create robots.txt for WordPress. Please note that search engines pick up changes to this file only after some time. For the most complete overview, see the article on how to make robots.txt for a site on various CMS.

Edit the directives according to your needs. Do not mindlessly copy everything into this service file: careless changes can lead to important pages or sections of the site being completely removed from search results. I hope this guide is useful to you. Feel free to leave comments with questions.

Thanks for reading: SEO HELPER | NICOLA.TOP